Information about Kai-Uwe Carstensen

In other words, I am interested in how language relates to the world via cognitive representations and psychological processes.

I am one of the few who believe, and have said for more than 30 years now, that selective attention plays an important role in cognition, much more important than currently acknowledged. This view is most elaborately presented in the Cognitive Processing article shown above (see also the slides of my talk at the 'Language and Perception' conference in Bern, Switzerland, September 2017).

“The problem of understanding the relation between concepts and perception is about as fundamental as any which we currently face, and the key to it is provided by the notion of selective attention.”

John Campbell

Apart from the philosophical-linguistic problem of reference, there is a recent neuroscientific theory (attention schema theory) that places selective attention at the heart of models of consciousness.

Now that my article Attentional semantics of deictic locatives has appeared in Lingua (Nov. 2023), I am definitely the only one worldwide who has published on the semantics of ALL major classes of spatial expressions and phenomena in corresponding separate, international, peer-reviewed, in-depth publications, based on a modern, semi-formal, cognitivist attentional framework, i.e.,

- Spatial object nouns (Why a hill can't be a valley Carstensen/Simmons paper (1991), Lang/Carstensen/Simmons book (1991)) and (spatial) upper level ontologies (Cognitive Processing (2011) paper)

- Dimensional adjectives (long, wide, high, ..., Lang/Carstensen/Simmons book (1991))

- Distance adjectives and gradation (e.g., more than three feet higher than ..., dissertation and Semantics of gradation paper (2013))

- Local/locative prepositions (above, behind, on, ..., dissertation and Attention and meaning book article (2015))

- Directional prepositions and (non-)actual locomotion expressions ((walk/run/...) from, to, through, along, ..., From motion perception to Bob Dylan. A Cognitivist attentional semantics of directionals paper (2019) in Linguistik online)

- Spatial verbs (paper on follow (1995) and Dylan paper (2019))

- Locative deictics/adverbs like here and there (Lingua paper (2023), Pdf of accepted manuscript)

- Route descriptions (Master's thesis (1991) and ECAI paper (1992))

Plus I have done practical work in spatial computational linguistics by implementing a system for the generation of route descriptions (WebS in the LILOG project), a system for interpreting dimensional designation of objects (OSKAR), and a system that extends OSKAR by adding distance aspects of spatial relations between objects plus gradation aspects (GROBI) (see Projects section).

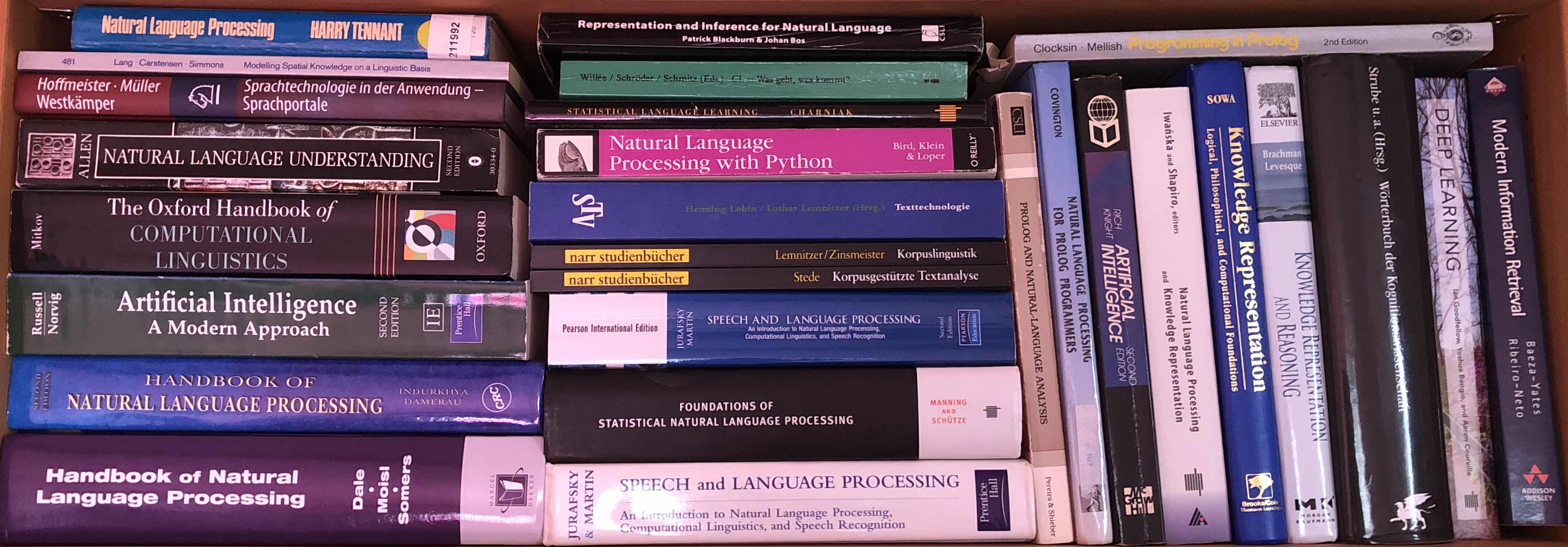

I have always had a great interest in computational linguistics (Computerlinguistik)/ natural language processing (Sprachverarbeitung) and its practical applications, i.e., language technology (Sprachtechnologie).

Ideally, both lines of interest meet when understanding how language functions helps to make better applications. This, actually, seems to be corroborated by recent developments:

“A recent trend in Deep Learning are Attention Mechanisms. [...] Ilya Sutskever, now the research director of OpenAI, mentioned that Attention Mechanisms are one of the most exciting advancements, and that they are here to stay.”

Denny Britz (www.wildml.com/2016/01/attention-and-memory-in-deep-learning-and-nlp/)

In fact, current practical neural network models have greatly benefitted from neuroscientific models of attention, boosting performance, e.g., for computational linguistic applications ("Attention is all you need" (Vaswani et al., 2017, Computation and Language)).

Truth be told: "attention" is highly polysemous, so always have a close look at what is meant in each case!

Fun fact for insiders: you'll find no reference to BERT or ERNIE here, but have a look at OSKAR (oscar) and GROBI (grover) in the 'Projects' section.

By the way, given that ChatGPT and corresponding existing/coming apps are the talk of the town in 2023, and that discussions about their impact on our life and education are omnipresent (see, e.g., "ChatGPT for Good? On Opportunities and Challenges of Large Language Models for Education" (Kasneci, E., Seßler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., … Kasneci, G., 2023 (January 30), Web preprint)), the following quote from my 2003 review has had its 20th anniversary:

“Was heute noch als (web-basierte) Multimedia-Applikation zur Vermittlung von Inhalten daherkommt, stellt sich in 20 Jahren vielleicht schon als flexibles Lehrtool dar, das, wenn auch eingeschränkt, in Dialoge mit Lernenden tritt [...] Neben vielen Anwendungsgebieten einer reifen Sprachtechnologie ist Lehre ein Bereich, der für den Einsatz intelligent interagierender tutorieller Werkzeuge besonders breite Perspektiven eröffnet.”

[ “What comes as a (web-based) multimedia application for knowledge transfer today, may present itself in 20 years as a flexible teaching tool entering into dialog with learners – even if restricted in some way [...] Beside many (other) areas of application of a mature language technology, it is especially education that opens up many new vistas for the use of intelligently interacting tutorial tools” ]

(Carstensen 2003c:160)

The slow and late reception of insights about attention has taught me that it may be beneficial or even necessary in some domain to view things differently than usual. Such a change of perspective is what I propose in my Frontiers in Artificial Intelligence: Language and Computation paper "Quantification: the view from natural language generation" (2021) (Pdf, open access link) for aspects of quantification.

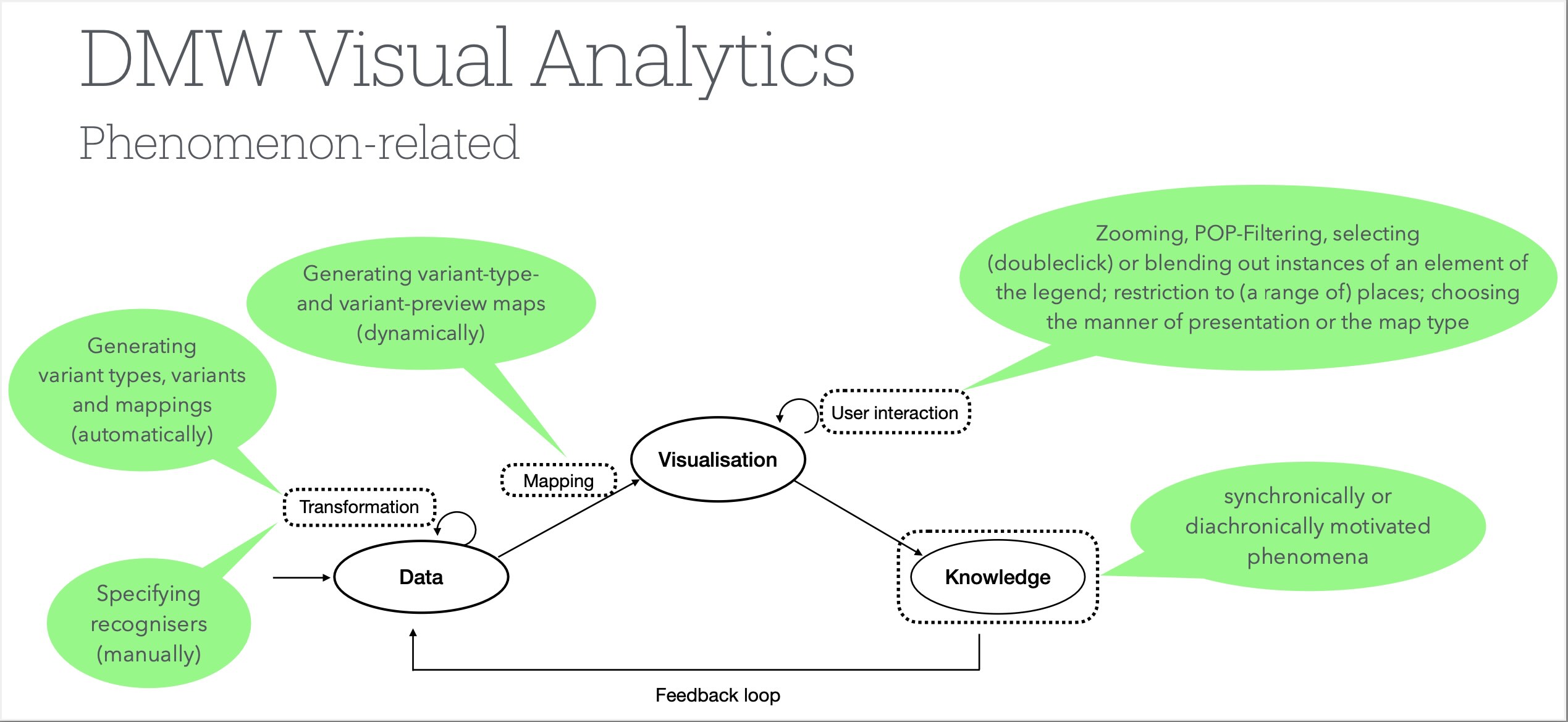

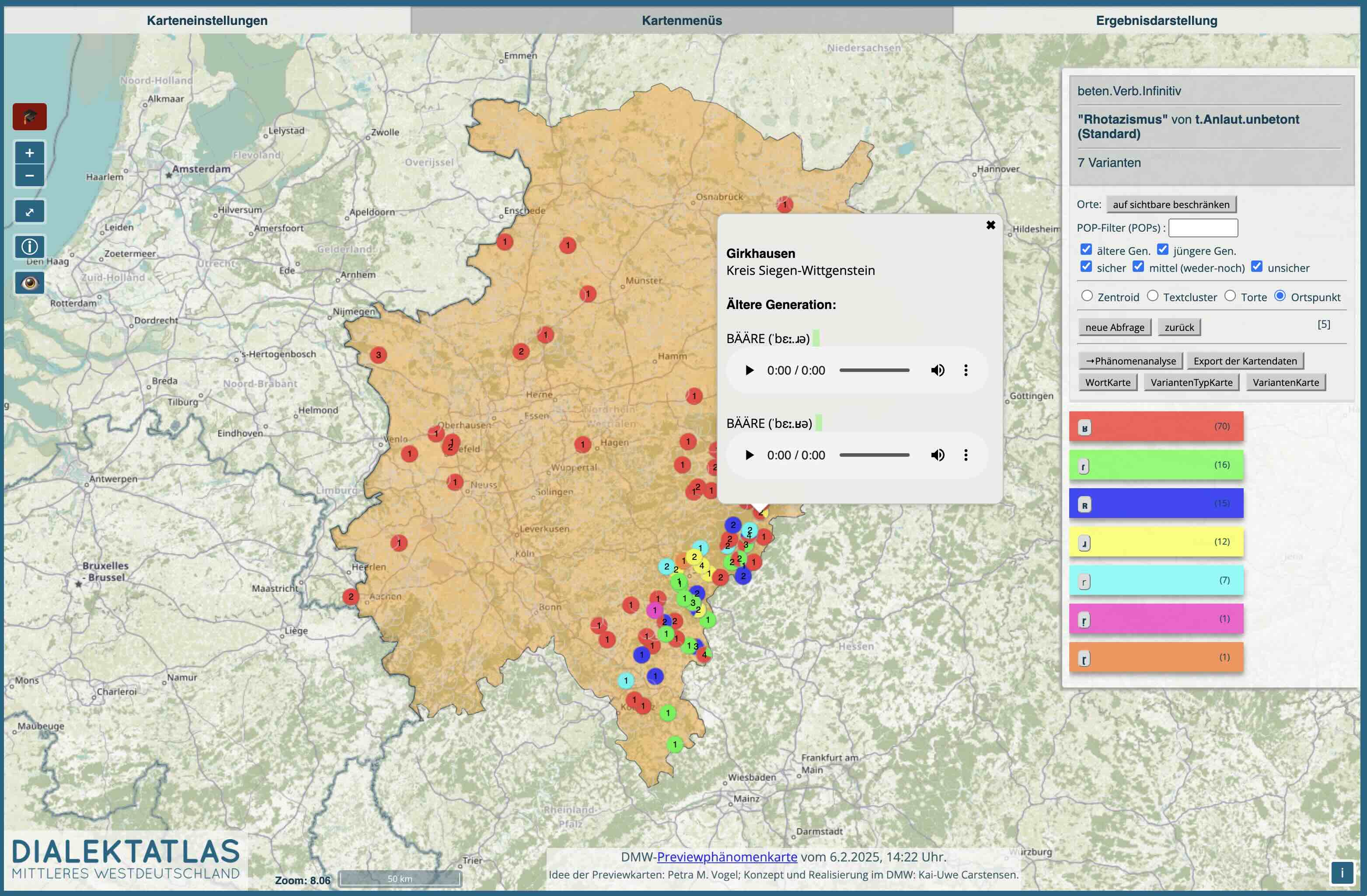

Lately, I have been working on visualizing dialect data in the DMW project. As I have shown in a talk at VisuHu2017 ("Visualization processes in the humanities", Zurich, 2017), aspects of attention are important in this field, too (see the selected slides of the talk). This fact has been recognized, see

Perkuhn, Rainer / Kupietz, Marc: Visualisierung als aufmerksamkeitsleitendes Instrument bei der Analyse sehr großer Korpora [Visualization as attention-guiding instrument in the analysis of very big corpora]. In: Bubenhofer, Noah / Kupietz, Marc (Hrsg.): Visualisierung sprachlicher Daten. Visual Linguistics – Praxis – Tools. Heidelberg: Heidelberg University Publishing, 2018.

Usually, it takes decades to produce a comprehensive dialect atlas, as you have to perform thousands of interviews with hundreds of questions in hundreds of places, to analyze (transcribe) the spoken language into phonetic text (e.g., IPA transcripts), and to evaluate the data by experts according to dialectological principles and theoretical phenomena in question. All that before you can even start to print or digitally generate a map.

In this project, we break with this tradition by omitting the evaluation step. This allows us to automatically generate digital maps as soon as analyzed data are given, that is, VERY EARLY! These innovative so-called "preview maps" make it possible to directly monitor the gathered dialect data and their distribution as "speaking maps", both for experts and the public.
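To make the idea of skipping the evaluation step concrete, here is a minimal sketch of how unevaluated transcriptions could be turned into per-place data for a preview map. It is not the DMW implementation: the record format `(place, transcription)`, the function name `preview_map_data`, and the sample data are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical record format: (place, transcription). The real DMW data model
# is not described here, so these names are illustrative assumptions.
def preview_map_data(records):
    """Count transcribed variants per place, without any expert evaluation."""
    variants_by_place = defaultdict(Counter)
    for place, transcription in records:
        variants_by_place[place][transcription] += 1
    # Plain dicts are easier to hand to a map renderer or to serialize.
    return {place: dict(counts) for place, counts in variants_by_place.items()}


# Invented sample data, for illustration only.
sample = [
    ("Place A", "ʃtaɪn"),
    ("Place A", "ʃtaɪn"),
    ("Place A", "ʃteːn"),
    ("Place B", "ʃteːn"),
]
preview = preview_map_data(sample)
```

In such a setup, the map can be regenerated whenever new transcriptions arrive, because nothing in the grouping step depends on a dialectological evaluation of the data.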

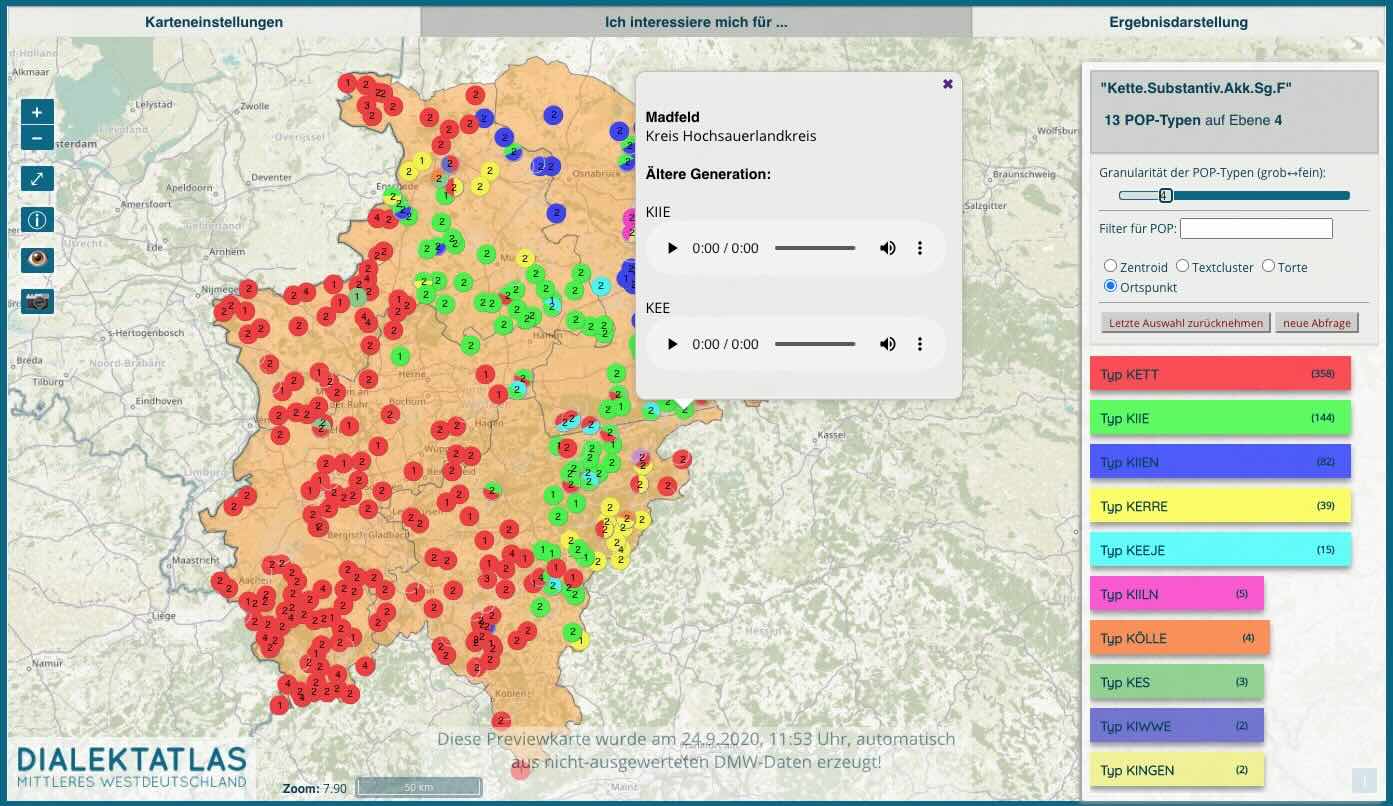

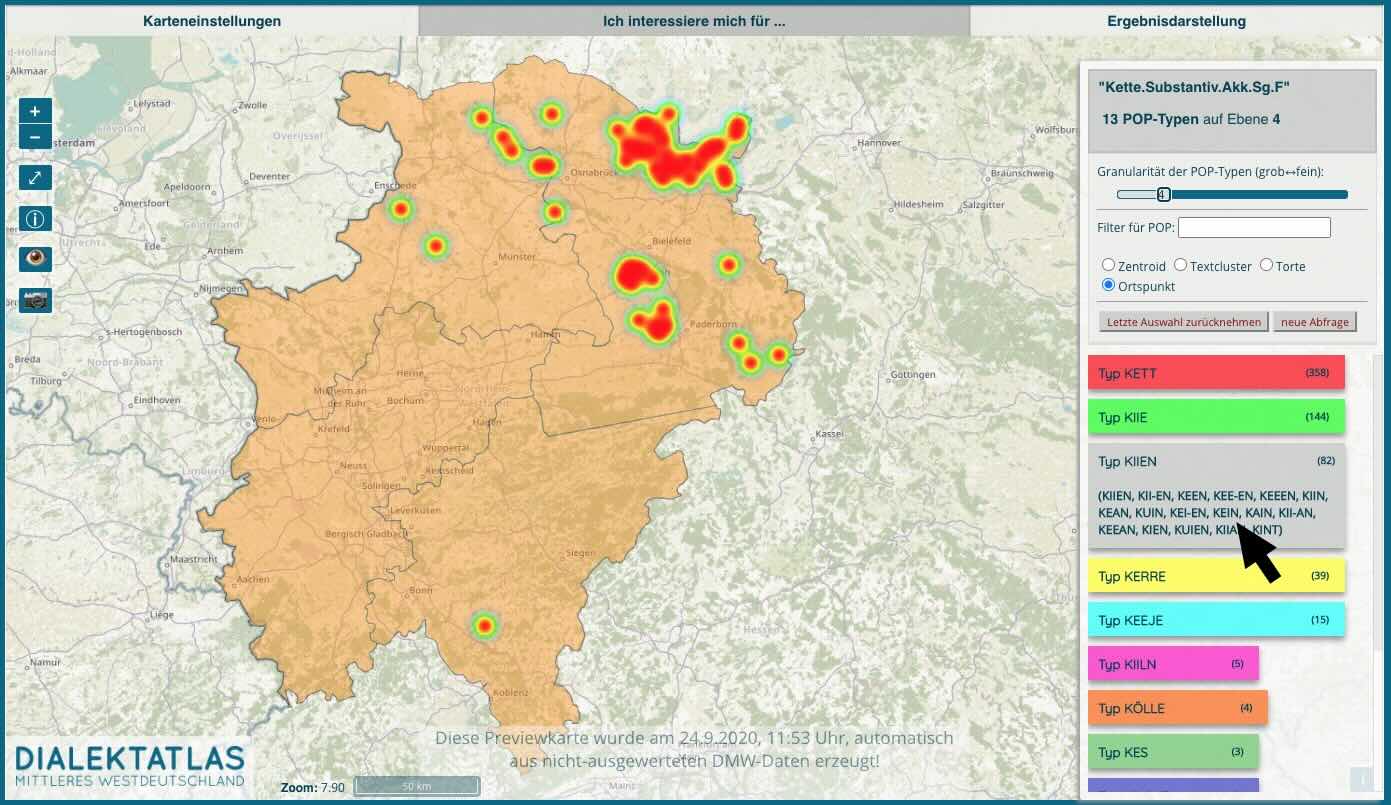

If you think about it, there's only one problem: how to prevent cluttering a dialect map with symbols for dozens (even hundreds) of different IPA types, and, correspondingly, to ensure effective and user-friendly interactive use of such digital maps. I have come up with a satisfactory, innovative solution for this problem (the attention-guidance in automatically generated dialect maps). Have a look at my paper on dialect data visualization in the DMW project (Pdf) (online version). See also the slides of a talk where I present this innovative idea of user-friendly on-the-fly generation of preview dialect maps based on unevaluated data. There's more on this in the project section.

[or go directly to the introduction to current preview phenomenon maps]

Especially in trumpet-loud times like these, I fully agree with the statement made in the song "Den Revolver entsichern" by the Hamburg band Kettcar:

Keine einfache Lösung haben, ist keine Schwäche [Having no simple solution is no weakness]

Die komplexe Welt anerkennen, keine Schwäche [Accepting the complexity of the world is no weakness]

Und einfach mal die Fresse halten, ist keine Schwäche [To simply shut the fuck up is no weakness]

Nicht zu allem eine Meinung haben, keine Schwäche [Not always having an opinion is no weakness]

To add another aspect: It is no weakness to not invade other sovereign countries. In any case, leaders should not exert force to mask their own weaknesses. It's so distressing.

By the way, I am a big fan of the German neuroscientist/cognitive scientist Maren Urner. She works on the important role of attention in how we perceive, talk about, and act in the world. Her motto: "everything starts within our head". Her goal: to get people to emotionally allow rational behaviour that is needed to solve the problems the world faces. Her proposal: to think positive (attend to positive aspects) and to focus on solutions (rather than only the problems), especially on the social/political/media level.

Here's my essay on scientific reviewing:

If you're interested, here's the children's book I wrote for my little daughter (~age nine, in German), inspired by our holidays in Iceland some fifteen years ago. Many pictures (~42 MB)!

C. V.

- studies of Linguistic data processing, Linguistics and Computer Science at the universities of Trier and Hamburg with a focus on Computational Linguistics and language oriented AI.

- Magister Artium (M.A.) in 1991 with a thesis on the cognitive aspects and the automatic generation of route descriptions

- since then researcher and lecturer at

  - the Institute of Semantic Information Processing / Cognitive Science (and the 'Computational Linguistics and AI' department) at the University of Osnabrück

  - the Institute for Computational Linguistics (IMS) at the University of Stuttgart

  - the Institute of Computational Linguistics at the University of Zurich, Switzerland

  - the Institute of Linguistics and Computational Linguistics at the University of Bochum

  - the Philosophical faculty at the University of Siegen

- Dr.phil. in 1998 with a doctoral thesis on 'Language, Space, and Attention'

- Interim professor of Computational Linguistics at the University of Freiburg im Breisgau (substituting for the professorship formerly held by Udo Hahn) (4/2005-3/2007)

Some history

I like to describe myself as standing in several, often conflicting, traditions.

Computational linguistics

In 1983, I began studying "Linguistische Datenverarbeitung" ("linguistic data processing") in Trier, which was the first full course of studies about computational linguistics in Germany. After the intermediate examinations, I changed to studying linguistics and informatics in Hamburg, where things looked more interesting. While Trier stayed on the level of linguistic data crunching (which is, as a matter of fact, the current mode), I had become interested in the more cognitively oriented aspects of knowledge and natural language processing as parts of Artificial Intelligence. I also had learnt to value interdisciplinary approaches to language as a part of the cognitive system (see, for example, Terry Winograd (1983)'s "Language as a Cognitive Process").

Allow me to cite Chris Manning from his 2015 Last words in Computational Linguistics 41 (4), p. 706:

“It would be good to return some emphasis within NLP [Natural Language Processing, K.-U.C.] to cognitive and scientific investigation of language rather than almost exclusively using an engineering model of research. [...] I would encourage everyone to think about problems, architectures, cognitive science, and the details of human language, how it is learned, processed, and how it changes, rather than just chasing state-of-the-art numbers on a benchmark task.”

And yes, I'm aware of the fact that this is not en vogue these days.

Cognitive linguistics

My first linguistics teacher in Trier was René Dirven, one of the most active proponents of "Cognitive Linguistics" at that time, at least in Germany, if not in Europe. With L.A.U.(D.)T. (Linguistic Agency of (Duisburg, former) Trier), he widely disseminated the ideas of Fillmore, Langacker, Lakoff, Talmy, and many others by distributing copies of famous papers (books) for little money. He invited Ron Langacker for a lecture series on his "Cognitive grammar", which I attended. Of course, I owned copies of "Foundations of Cognitive Grammar I and II", each with the characteristic orange cover sheet. With my Fromkin/Rodman-style basic knowledge of language, I was blown away by Langacker's totally different cognition-based approach to linguistic argumentation and presentation.

In Dirven's seminars, we would read and discuss all the seminal papers of Cognitive Linguistics at that time. Besides that, I was one of the first to read drafts of Lakoff's "Women, Fire, and Dangerous Things", long before it appeared as a book. In one of the seminars, I researched and wrote a paper on the concepts "trajector" and "landmark" in Cognitive Linguistics. Later, this came in handy when I started to criticize the field: the notions are based mainly on the well-known figure/ground distinction, which is insufficient to explain some of the phenomena I later worked on.

Of course, especially as a computational linguist, I had also become familiar with the Chomskyan view of cognitive linguistics, and later worked rather in the Jackendoffian and Bierwischian tradition. Most importantly, still being a student, I had collaborated with Ewald Lang (translator of Chomsky's "Aspects..." into German, and later director of the center of linguistics (ZAS), Berlin). I had implemented his theory (resulting in a system named OSKAR), and had co-written a paper and a book (see Lang/Carstensen/Simmons 1991) on this successful collaboration. I have always benefitted from this crash course on crystal clear scientific writing.

Yet, none of the linguistic models is perfect, and instead of choosing one side, I always try to integrate the different views (with an emphasis on cognitivism). Fatal irony of that: I'm not recognized/cited by any of these groups.

Language technology: Natural language systems

The second or third book I read in Trier (just after the "Introduction to linguistic data processing") was Harry Tennant (1981)'s "Natural Language Processing: An Introduction to an Emerging Field", which was an overview of the natural language systems up to that time. It felt like a sixth-grade mathematics book for a kindergarten kid for me, but it sparked my interest in the "systems view" on language processing. It also already included the systems implementing aspects of knowledge representation and processing.

After a one-week course on PROgramming in LOGic (PROLOG) in 1985 (?), I decided to build my own system: a system for the generation of route descriptions (that semester, I had participated in a practical seminar on route descriptions). I learned how to represent (macro-)spatial knowledge and how to implement aspects of language production/generation. I succeeded, and continued working on it in the following years (up to my Master's thesis). I ported the system into the big LILOG project I later worked in as a student, and it became part of the LILOG-system prototype that was presented at CeBIT, Hannover (see Research->Projects->LILOG).

In Hamburg, I attended seminars of Walther von Hahn and Wolfgang Hoeppner of the "Natural language systems group" in the informatics department, who had initiated and participated in Germany's biggest dialogue system projects at that time. Later, I continued working on natural language systems, even if only as a heuristic tool in the scientific process. In our introduction to computational linguistics and language technology, I organized the application-oriented parts, and I also wrote my "systems view" introduction to language technology.

I'm happy to see the current practical developments of LLM-based generative AI. I'd never have expected this to come (so soon). Theoretically, however, this is based on a black-box, behaviorist view of intelligence/language that Chomsky warned us about some 70 years ago (and which I am not interested in). Let's see where this leads. And let's hope that we are not effectively acting like the sorcerer's apprentice here.

Language and Space: Spatial semantics

The 1980s were one of the times when topics that had come up 10 years earlier in the U.S. became prominent in Germany. One of them was "semantics of spatial expressions", which dominated all branches of cognitive linguistics at least in the period between Miller/Johnson-Laird (1976)'s "Language and Perception" and Bloom et al. (eds.)(1996)'s "Language and Space". Most of my scientific life has to do with that topic, starting with Langacker's presentation of his Space Grammar and my oral seminar presentation of Lakoff/Brugman's "Story of over". In Hamburg, I presented the theory of Ewald Lang in a seminar long before I met him and implemented his theory. In the LILOG-Space sub-project, of which I became a student member, we worked on semantic and conceptual representations of spatial expressions within a system that was expected to "understand" spatial descriptions. Both my Master's and doctoral theses involved aspects of spatial semantics, representation, cognition and computation, and today I can say that I am one of the few who have worked and published on the spatial semantics of all main parts of speech (nouns, prepositions, adjectives, adverbs, verbs), and on both micro- and macro-space phenomena.

I was the first in Germany and perhaps (one of very few) in the world who realized that the representations of space needed for AI and linguistic semantics require EXPLICIT spatial relations, that the traditional WHAT/WHERE distinction is not sufficient for explaining the phenomena, and that corresponding theories instead need to be based on the engagement of selective attention in the spatial domain (see below and Research->Projects->GROBI).

Knowledge representation (for natural language processing)

Winograd (1972)'s "Understanding natural language" had clearly demonstrated how important internal representations of knowledge about the world are (for natural language processing), which fostered the development of this subfield of (Good Old-Fashioned) Artificial intelligence. I had studied "knowledge and language processing", and later got a position in teaching and researching in this field. This was the time of the "imagery debate" and of "mental models", that is, the kinds of mental codes/formats used for knowledge representation.

Accordingly, the first lecture I gave in 1992 or so was about "Knowledge representation", in which I reviewed the aspects of systems from SHRDLU to Cyc, and the development of representation languages from Schankian Conceptual dependency structures to Brachman's KL-One, the precursor of today's description logics. Here's my German overview article about knowledge representation of our introduction to computational linguistics.

Logic and Programming in logic (PROLOG)

Logic was another big topic in the 1980s, see for example the meaning of "LILOG": "LInguistic and LOGical methods (for text understanding)". Its use had become the sophisticated method for specifying knowledge representation structures (as opposed to idiosyncratic notations) and for studying different kinds of reasoning, although, as the prototypical GOFAI method, it was in general already beginning to decline as the main approach in AI, at least for practical applications. For high-level natural language processing, the logical approach (including programming in PROLOG) became (and for some time stayed) the accepted style of implementation, especially in computational semantics. I loved to program in PROLOG and did so for my route description system, OSKAR, and GROBI.

And yet, as a Cognitivist, I never adopted the full "logical semantics" philosophy, because I found the ontologies in model-theoretic semantics underdeveloped and naïve (see my papers on "cognitivist ontologies" (2011) and on quantification (2021)).

Ontologies

Ontologies are, IMHO, at the core of natural language processing (and AI, in general). I got to know this topic when we (some student trainees at IBM, Stuttgart) had to develop an ontology for the LILOG system. The single most important insight that I got here was: it's not trivial. It was not until 20 years later that I really worked on that topic again.

The exceptions were the work on spatial object ontologies with OSKAR (see the Why a hill can't be a valley Carstensen/Simmons paper (1991) and the Lecture Notes in AI book (Lang/Carstensen/Simmons 1991)) and the work in the GERHARD project on automatically classifying web pages (pre-Google) based on a formal categorization of entities (the extended universal decimal classification of the ETH Zurich).

My later work is on upper-ontological distinctions (see the 2011 paper on "cognitivist ontologies") important for natural language semantics.

Cognitive Science (German: Kognitionswissenschaft)

The rise of Cognitive Science in Germany coincided with my academic education. Correspondingly, I had breathed in the interdisciplinary spirit inherent in natural language processing and semantics from early on. My Master's thesis was not designed as a system description or a particular analysis of some linguistic aspect, but as an investigation of a complex phenomenon (generation of route descriptions) from a Cognitive Science point of view, integrating multi-disciplinary (linguistics, psychology, AI) aspects and perspectives.

Later I was active in the German Society of Cognitive Science for a while, and gave the second talk at the first conference of the society in 1994. Unfortunately, there was some under-reception of my attention-oriented research due to some competence lag of the involved people and some other factors.

I also helped establish the first full course of studies "Cognitive Science" in Germany (see my short international and the longer German program promotion presentations). Also, one of my lectures was an introduction to Cognitive Science (some slides).

Meaning and Cognition

If you look at how the meaning of natural language expressions is investigated in disciplines like philosophy, linguistics, and psychology (or even special subfields like philosophical or formal semantics, or psycholinguistics) you cannot but notice an incomplete, often redundant treatment of the phenomena. Mostly, either the complexities of the linguistic data, or the aspects of meaning as a cognitive phenomenon are disregarded. Instead you often find overly complex, but naïve, formal theories, or simplistic experiments. It is my firm belief that only interdisciplinary approaches within Cognitive Science modelling relevant aspects of meaning and cognition lead to non-trivial progress of the field, cognitivist spatial semantics being a case in point (see Lang/Carstensen/Simmons 1991). But this is the voice of one crying in the wilderness.

In the 1970s/80s, there was a general and heated debate in cognitive science on whether meaning should be specified as in logic (denotational semantics, see Drew McDermott's "No notation without denotation!", as in formal semantics) or handled more laxly (Woods's or Johnson-Laird's procedural semantics). Being aware of the problems of either side, I usually argue for a cognitivist intermediate stance that avoids naïve ontologies and respects the role of cognitive processes. In OSKAR and GROBI, I used procedural semantics to demonstrate aspects of semantic interpretation.

Language generation

Having worked in the field of language generation with my system for generating route descriptions (Master's thesis), I have learned that interpretation and generation can be totally different, but complementary, perspectives on the same thing. For example, when interested in semantics, you might think about the meaning of some term, or about when, why, and how the term would be selected for speaking. I also learned that a change of perspective can help in theorizing.

This is what I emphasized in my Frontiers in Artificial Intelligence: Language and Computation paper "Quantification: the view from natural language generation" (2021) (Pdf, open access link) for aspects of quantification.

Interestingly, such a generation-oriented stance toward language and meaning has been recently explicitly expressed by Ray Jackendoff:

“[...] one can now come to view language not as a system that derives meanings from sounds (say prooftheoretically), but rather as a system that expresses meanings, where meanings constitute an independent mental domain – the system of thought.”

from: Ray Jackendoff, 2019, on his Conceptual Semantics

Attention research

Unbeknownst to most, the 1980s saw the rise of another important topic. Due to technical progress (for example, the development of personal computers and the corresponding opportunities in experimental designs, or imaging methods like PET scans or MRI) and converging research in (computational) neuroscience and neuropsychology, the investigation of selective attention could be brought to another level.

As a student assistant, part of my job was to copy articles out of journals for my professor. Not only did I get to know relevant journals within Cognitive Science, I also got used to scanning their content for some interesting stuff. Especially when doing research for my first dissertation project (I wanted to work on concept learning), I got hooked on the "Experimental Research" psychological journals. When I abandoned the project (for reasons not relevant here) and turned to spatial semantics again, I sooner or later realized that "selective attention" not only was a hot topic (M. Posner), but also part of the solution of the imagery debate (S. Kosslyn), an essential aspect of the cognitive system (L. Barsalou) and interesting from a representational point of view (G. Sperling, D. Kahneman), and a source of explanation for some of the semantic phenomena I was interested in (G. Logan). I came up with a totally new, attentional approach to spatial semantics, as an alternative to both ontologically-naïve formal semantics and imagery-based cognitive semantics.

Again, unfortunately, this research was as well received as, say, e-mobility by German car factories at that time (both things have changed, even if only 30 years later). Luckily, my publication list clearly shows that I was the first in Germany to discover the role of selective attention for (spatial) language (semantics), recognizing and acknowledging the relevance of the work of Langacker, Logan, Posner, Regier, Tomlin, Talmy and others. Plus, I was probably the first who, in an international context, assumed different directionalities of spatial attentional changes in the processing of linguistic spatial relations (see my paper at the Language & Space Workshop (2002) and also my Spatial Cognition & Computation (2007) paper). Note that L. Carlson, K. Coventry, S. Garrod, and E. v.d.Zee were participants.

My concept of attentional microperspectivation is not quite reflected in current work investigating attentional changes (and their directionality) in computing spatial relations. So, to all Franconeris/Knoeferles out there: do your homework and learn how to cite! Plus: do not steal ideas that you didn't fully understand, and do not perform irrelevant research resulting from that misunderstanding.

There is a single other (computational) linguist I know of, Silvio Ceccato (1914-1997), who attributed to selective attention a similarly central and intrinsic role for language, cognition, and AI modeling, more than half a century ago (in the time of 'cybernetics'). Although not as fine-grained as my work (instead coarser and more general, as in Talmy's), his "operational linguistics" (see his "Operational Linguistics", Foundations of Language, 1, 1965, pp. 171-188) highlighted the constitutive and constructive role of attention for establishing differential aspects of structure on the theoretical level. I am grateful to Giorgio Marchetti, one of his followers, for pointing me to his work.

Interdisciplinarity

One of the interesting aspects of these traditions is that they converge at points where you wouldn't have expected it. Above all is the role of attention for semantics. But then, psychological research in combination with linguistic data also led me to acknowledge its role for our perception of the world (see the paper on "cognitivist ontologies"). And finally, my work in semantics and on ontology has shown some opportunities to compensate for well-known deficits of logic (especially, first-order logic), see my Frontiers in Artificial Intelligence (2021) paper on why we need a different predicate logic.

It's only that I stopped trying to implement all that (which I liked to do for quite a while).

Completing the overview of my interdisciplinary education and work, here's a list of some events in Germany worth mentioning:

- First German full course of study 'Linguistische Datenverarbeitung' ('Computational Linguistics') in Trier (education)

- KIFS (KI-Frühjahrsschule/AI-spring school) 1986 in Dassel (participation)

- foundation of the computational linguistics section of the German Linguistic Society at DGfS 1988 in Bielefeld (presence)

- summer school of the German Linguistic Society 1989 in Hamburg (participation + OSKAR program demo)

- GWAI (German AI conference) 1989 (participation + paper/talk, OSKAR program demo)

- First German full course of study 'Computerlinguistik & Künstliche Intelligenz' ('Computational Linguistics and AI') in Osnabrück (teaching)

- First KONVENS ('Verarbeitung natürlicher Sprache'/'Processing Natural Language', 1992 in Nürnberg) (participation + paper/talk)

- 'Windows to Cognition' (colloquium of the 'Cognitive Science' graduate college 1993 in Hamburg) (participation + conference report in KI)

- First Conference of the German Cognitive Science Society 1994 in Freiburg (participation + talk)

- Workshop 'Wege ins Hirn'/'Paths into the Brain' 1996 at Kloster Seeon (failed attempt by E. Pöppel and others to install a huge interdisciplinary 'leading project' combining Neuroscience, Cognitive Science and AI) (participation + short presentation)

- First 'Interdisziplinäres Kolleg' (IK 1997 in Günne) (participation + conference report in Kognitionswissenschaft)

- First German full course of study 'Kognitionswissenschaft'/'Cognitive Science' in Osnabrück (coordination, teaching)

- First German full course of study 'Linguistische Informatik' (Linguistic Informatics) in Freiburg/Brsg. (teaching)

- First two-semester certificate course 'Computational linguistics' (several lectures plus programming course) at the University of Siegen (teaching)

“Droll thing life is – that mysterious arrangement of merciless logic for a futile purpose. The most you can hope from it is some knowledge of yourself – that comes too late – a crop of unextinguishable regrets.”

Joseph Conrad, Heart of Darkness

Publications of Kai-Uwe Carstensen

Here's the list of publications as pdf. You'll find links to most of my papers as pdfs there.

Projects

LILOG (1987-1991)

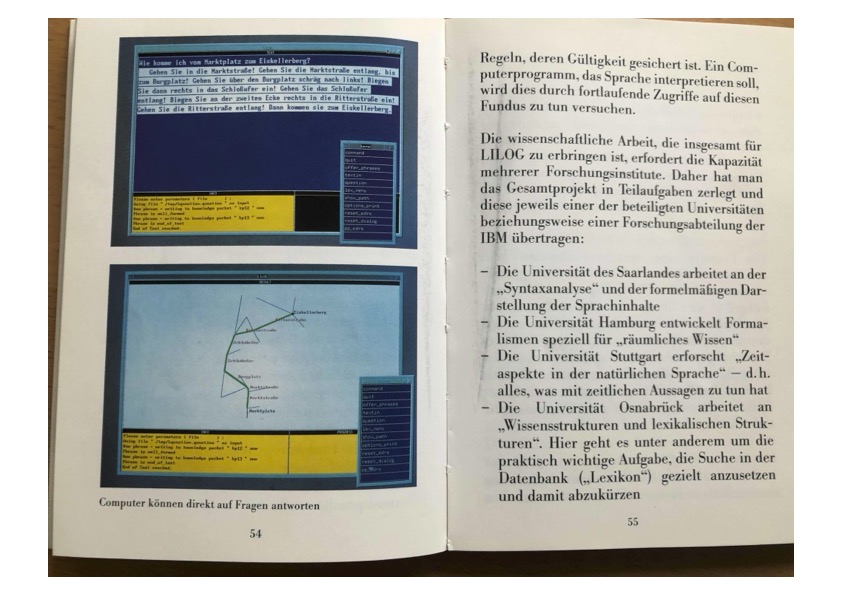

This was a basic research project in which a system for understanding texts was developed (for a survey, see "Text Understanding in LILOG", which also contains the Carstensen/Simmons paper (1991)). Interestingly, I added a component for generating route descriptions to the system (see the following figure on page 54). I did this work as a student of Computational Linguistics and Artificial Intelligence in the subproject LILOG-SPACE at the University of Hamburg.

In the final LILOG system LEU/2, which was presented at CeBIT in Hannover (Germany) in 1991, the generation component played a central role in showing the understanding capabilities of the system (read the technical description of the system in the LILOG book article by Jan Wilms, the main software engineer, exemplifying this (p. 714); note the funny mistake in the text (confusing "Anfangspunkt" and "Endpunkt")).

As Andreas Arning, IBM CTO of the project at that time, put it in 2013 in a blog ("https://bertalsblog.blogspot.com/2013/11/andreas-arning-uber-die-praxis-des.html?m=1", retrieved Dec. 2021, my translation):

"If you want, we built the first precursor of today's WATSON system."

(from: Brenner, Robert (1990), IBM als Partner der Wissenschaft, IBM Forum D12-0035; the screenshots show the route description part of the system)

My Master's thesis (1991) was published as an IBM LILOG report:

OSKAR (1989-1990)

OSKAR was a small PROLOG-based prototype I developed for testing and extending Ewald Lang's cognitive-semantic theory of dimensional adjectives. It is based on spatial conceptual object schemata as representations of qualitative spatial information. These object schemata are used in OSKAR to determine whether specific combinations of dimensional expressions and object names can be conceptually interpreted (for example, "high hill" and "deep valley" are meaningful expressions, while "deep hill" and "high valley" are not).
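To make the idea of such a compatibility check a bit more tangible, here is a minimal toy sketch in Python (OSKAR itself was written in PROLOG, and its object schemata are considerably richer). The feature names, the schema entries and the function `interpretable` are assumptions invented for this illustration; they are not the original encoding of the theory.

```python
# Toy object schemata: each noun lists the dimension features it offers.
# The feature names are illustrative assumptions, not the original inventory.
OBJECT_SCHEMATA = {
    "hill": {"vertical"},
    "valley": {"depth"},
    "pole": {"maximal", "vertical"},
    "board": {"maximal", "across"},
}

# Each dimensional adjective needs one particular feature to attach to.
ADJECTIVE_FEATURE = {
    "high": "vertical",
    "deep": "depth",
    "long": "maximal",
    "wide": "across",
}


def interpretable(adjective: str, noun: str) -> bool:
    """Return True if the noun's schema offers the feature the adjective needs."""
    feature = ADJECTIVE_FEATURE[adjective]
    return feature in OBJECT_SCHEMATA[noun]


for phrase in ["high hill", "deep valley", "deep hill", "high valley"]:
    adjective, noun = phrase.split()
    print(phrase, "->", interpretable(adjective, noun))
```

In this simplified form, a combination counts as interpretable only if the schema of the object provides a slot the adjective can pick out, which is the gist of the hill/valley contrast above.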

In 1988, Ewald (as a scientist/linguist of the socialist German Democratic Republic) had fled the communist system and had stayed in Western Germany. He literally lived out of a suitcase and was in desperate need of a job. There was a connection to the project LILOG in Hamburg (see "Projects"), and I was asked whether I could implement his famous theory (which we had already discussed in seminars). Which I did, successfully, still being a student.

Lang, Ewald and Kai-Uwe Carstensen (1989). “OSKAR - ein Prolog-Programm zur Modellierung der Struktur und Verarbeitung räumlichen Wissens”. In: GWAI (German Workshop on Artificial Intelligence) ’89. Springer Verlag, pp. 234–243.

Lang, Ewald and Kai-Uwe Carstensen (1990). OSKAR - A Prolog Program for Modelling Dimensional Designation and Positional Variation of Objects in Space. IBM Deutschland GmbH, IWBS-Report 109.

Carstensen, Kai-Uwe and Geoff Simmons (1990). “Representing Properties of Spatial Objects with Objectschemata”. In: Workshop Räumliche Alltagsumgebung des Menschen. Ed. by Wolfgang Hoeppner. Fachbericht Informatik 9. University of Koblenz, pp. 11–20.

Lang, Ewald, Kai-Uwe Carstensen, and Geoffrey Simmons (1991). Modelling Spatial Knowledge on a Linguistic Basis: Theory - Prototype - Integration. Lecture Notes in Artificial Intelligence 481. Springer. isbn: 3-540-53718-X.

Apart from these publications, he and I gave several talks (Annual conference of German linguistic society, German Linguistic summer school, German annual conference of AI (see above) etc.) and presentations of OSKAR.

Let's put it this way: Our collaboration was not unhelpful for his further career, which led to his becoming director of the ZAS, one of the biggest linguistic institutes in Germany. Yet, as pivotal as it was, it is hardly mentioned in retrospectives (of his peers), see his vita.

SPACENET (1994 - 1998)

SPACENET was an EU-funded Human Capital Mobility Network that brought together the eleven major European spatial reasoning groups. The project investigated qualitative aspects of spatial representation and reasoning. I participated as a member of Christian Freksa's group, then at the University of Hamburg.

GROBI

GROBI was an extension of OSKAR which I developed for my dissertation thesis "Sprache, Raum, und Aufmerksamkeit [Language, Space, and Attention]". In addition to object aspects, it took into account relations between objects, the qualitative designation of distances, and aspects of gradation (e.g., "x is less than 2 metres higher (above y) than..."). GROBI thus presented an answer to some problems of the combinatorics of distance and relation expressions occurring at least in German (compare also "a few metres behind the house" and ?"a few metres close to the house"), which I investigated in my thesis.

The theoretical core of the thesis is that changes of (spatial) attention are necessary for the construction of conceptual spatial relations, which has to be reflected in the semantics of corresponding spatial terms.

Here you can find the part (written in German) introducing aspects of spatial attention (see also the bibliography of the thesis).

Some of my later papers are based on (and elaborate) the thesis (Semantics of gradation paper (2013) and Semantics of locative prepositions paper (2015)).

While other approaches to these phenomena model gradation with sets of objects (intervals), I observed that these only reflect implicit(ly represented) aspects of such domains. Therefore I propose to distinguish them from explicit(ly represented) aspects in the form of (changes of) selective attentional engagement to salient entities in cognitive working memories (hence the name "GROBI", derived from "GRaduierung Ohne (direkten) Bezug auf Intervalle" 'gradation without (direct) reference to intervals'). I maintain that such a cognitive account is needed in principle (see the Cognitivist ontologies paper). Furthermore, explicit changes of attention between objects represent different perspectives (so-called microperspectives) on a single implicit relationship, and thus allow finer distinctions needed for the proper treatment of natural language semantic phenomena.

GROBI includes attention-based aspects of linguistic spatial relations (later elaborated in the 2002 and 2015 papers). It emphasizes their perceptual grounding and, in particular, their close coupling to how a speaker conceives a situation retraced by the addressee (I called that "localization as mental presentation"). This is very close to Alistair Knott's grounding of linguistic aspects in visual-attentional processing and Michael Tanenhaus's close and incremental coupling of language and perception, and is therefore quite different from topological, semantic map approaches to spatial relations (by Regier, Gärdenfors etc.).

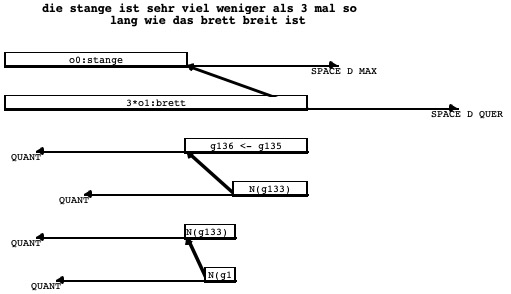

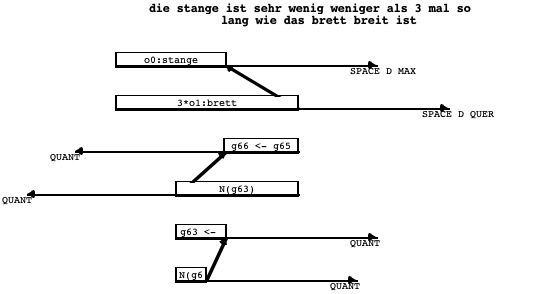

GROBI was a system that automatically parsed gradation expressions, analyzed them and produced a graphical presentation of the implicit and explicit relations (i.e., providing a procedural semantics of spatial expressions). All of this was based on the general attention-based theory and specific semantic proposals, and was meant as a verifiable automatic proof of concept, computation and (correct) analysis.

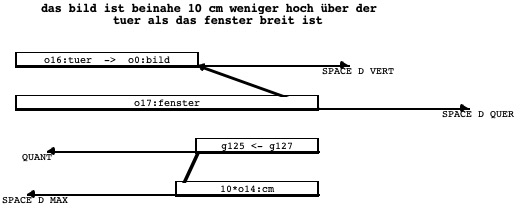

An example is given in the following GROBI-generated image that shows the cognitive semantic denotation for the sentence "The pole (Stange) is very much less (sehr viel weniger) than three times as long as the board (Brett) is wide (breit)" (unnatural complexity chosen just for the sake of presentation).

Rectangles represent the implicit extents aligned on their corresponding scales (necessary for comparison); they are non-overlapping only for presentational reasons. The oblique arrows represent explicit relationships between boundaries of extents. Please excuse some marginal imperfections of the graphics (e.g., partial labelling). Note the difference to the following analysis for the German sentence of "The pole is very little less than ...".

The next picture shows GROBI's output for a combination of spatial relation and dimensional extent measurements ("The picture (bild) is almost (beinahe) 10 cm less high above the door (über der tuer) than the window (fenster) is wide").

There are other researchers interested in the role of attention in cognition and in attentional semantics, some of whom are listed on a website maintained by Giorgio Marchetti (or go directly to his website of the book Attention and Meaning. The Attentional Basis of Meaning).

GERHARD I (10/1996 - 3/98)

The GERHARD project (German Harvest Automated Retrieval and Directory) aimed at classifying texts on the web and at their later retrieval according to topic-oriented queries. In this project, we used the Universal Decimal Classification (UDC) of the ETH Zürich to map natural language texts onto categories of the UDC hierarchy (see a short German public presentation).

Here's a quote from c't magazin (13/98):

GERHARD kann jedoch noch mehr: es ist derzeit die einzige Suchmaschine weltweit, die aus den Dokumenten automatisch einen nach Themengebieten geordneten Katalog erzeugt. Das Programm analysiert den Volltext und kategorisiert die Dokumente nach der dreisprachigen universalen Dezimalklassifikation der ETH Zürich (UDK). Das UDK-Lexikon enthält zur Zeit rund 70 000 Einträge.

[ “GERHARD can do even more: it is currently the only search engine worldwide that automatically generates a catalogue ordered by topics from the documents given. The program analyzes the full text and categorizes the documents according to the trilingual universal decimal classification (UDC) of the ETH Zurich. At this time, the lexicon of the UDC contains around 70 000 entries.” ]

International Bachelor-Master-Programme Cognitive Science (-9/99)

Since the winter semester 1998/1999, a new international Bachelor-Master-Programme Cognitive Science has been established at the University of Osnabrück. In this context, I worked as an official coordinator.

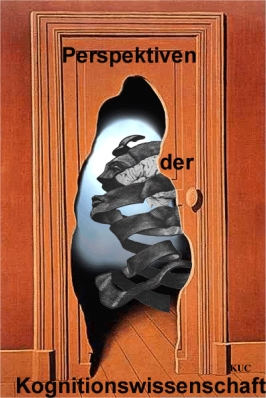

Here's a poster I created, intended to demonstrate the perspectives (taken literally) of the then quite young discipline: that unravelling (aspects of) the brain will shed light on aspects of the mind(-body problem).

I used this poster in presentations and in talks given, among other places, in Athens, Hong Kong, and Warsaw (see also my short international and the longer German program promotion presentations).

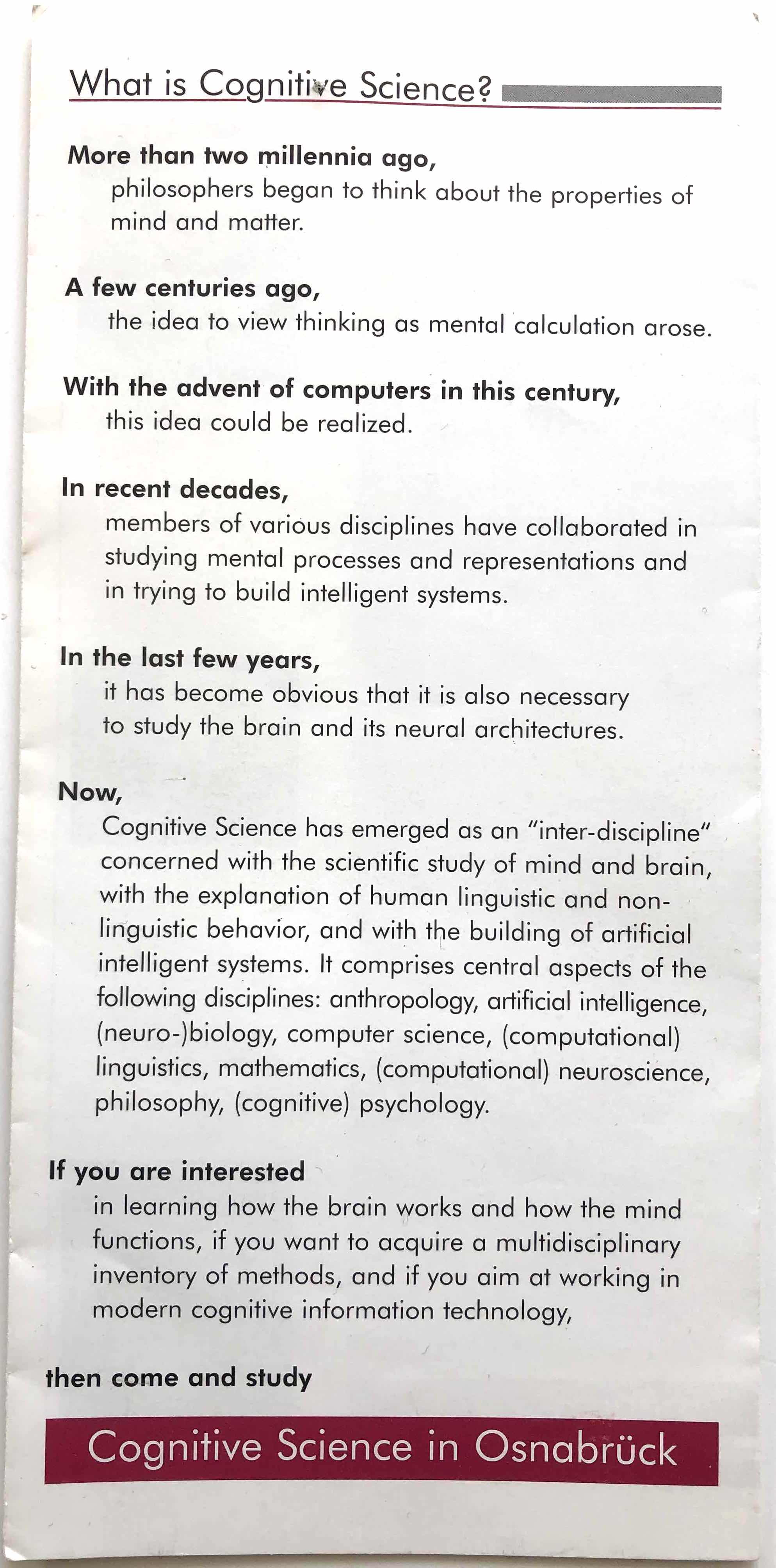

↓ Have a look at the description of Cognitive Science I created for the advertising brochure at that time.

Twenty years later, this text was still in use in some version (see the web page of the Institute of Cognitive Science in 2019 below).

As I write in my Personal View on Reviewing (p. 42f.):

“When in the mid-1990ies there was a structural change to come at the university of Osnabrück, where I worked, and everyone complained about the restructuring and financial cutting to be expected, I was the only one who stood up in a meeting and pointed to the positive perspectives of that situation. When sometime afterwards my boss gave me a brochure of the DAAD about funding new international courses of studies in Germany ("take a look at that"), it was about five minutes later that I ran back to him saying that we definitely should apply. Now, Osnabrück has Cognitive Science as an international interdisciplinary course of studies, and a corresponding flagship institute with a 50+ people staff. What do you know!”

Computational Semantics (-12/2000)

This was a project in the group of Hans Kamp at the IMS (Institut für Maschinelle Sprachverarbeitung (Institute for Natural Language Processing)), Stuttgart, of which I was a member in the final year. What I did was some kind of 'proof-of-concept' implementation of discourse interpretation theories in the context of segmented underspecified discourse representation structures, including some use of constraint logic programming for solving temporal constraint problems.

Have a look at the paper on computing discourse relations I wrote at the end of the project.

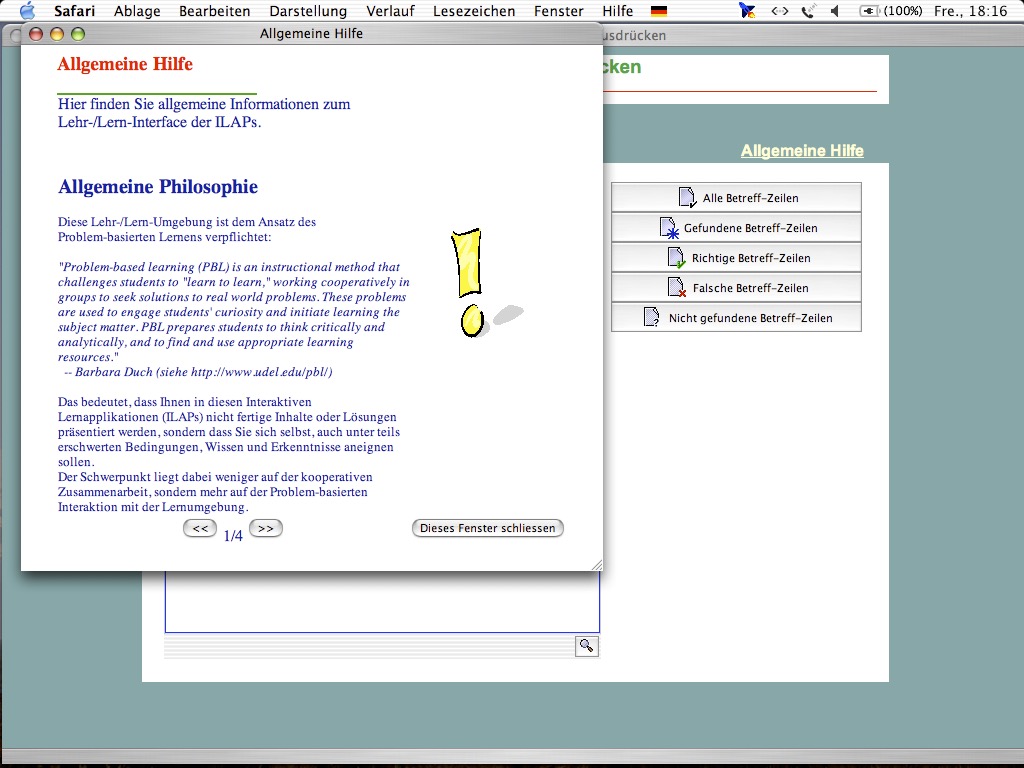

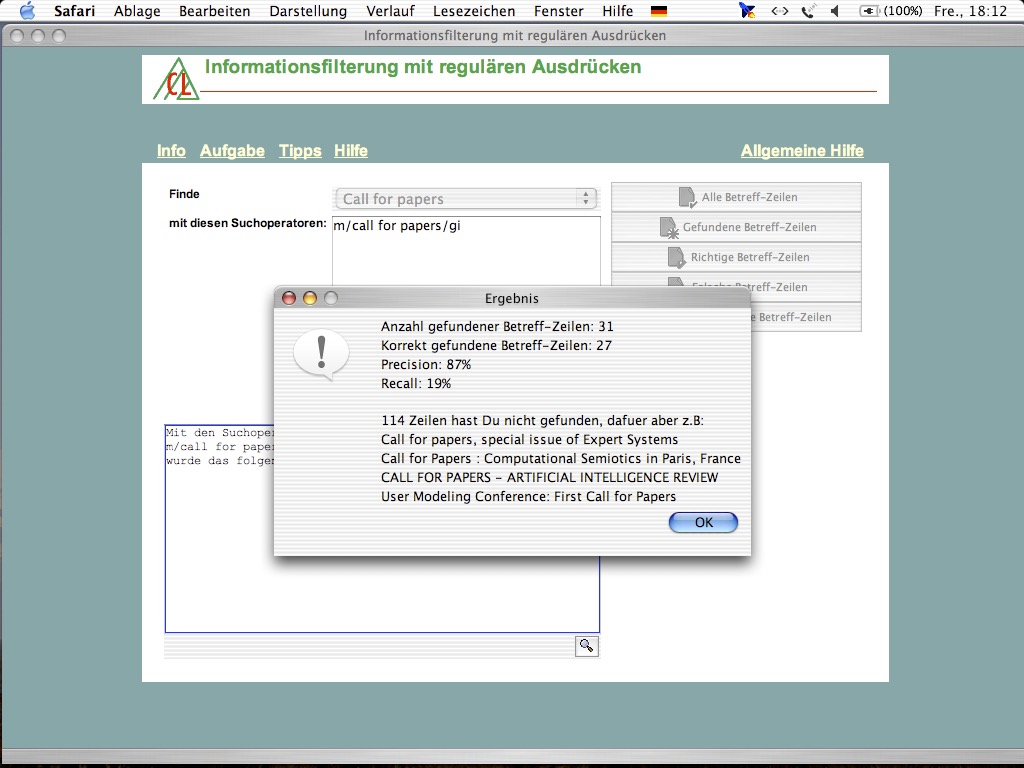

WebCL: Web-based problem-based teaching and tutoring of introductory Computational Linguistics courses

In this project at the University of Zurich, we combined enhanced traditional, but electronic, lecture notes, Web-based computer aided learning and the idea of Problem-based learning to yield a new approach, TIP (for 'Text-centred concept for Individual learning featuring Problem-based interactive learning applications'), aimed at supporting introductory Computational Linguistics courses. Have a look at the volume of Linguistik online on "Learning and teaching (in) Computational Linguistics" I have edited, with a paper of Carstensen/Hess (2003) on that topic.

Here's an example of the browser-based German problem-based interactive learning applications:

The TIP approach has been successfully developed further at the University of Zurich as a corresponding learning platform (CLab) of the computational linguistics group, see Clematide et al. (2007) (Clematide, S; Amsler, M; Roth, S; Thöny, L; Bünzli, A (2007). CLab - eine web-basierte interaktive Lernplattform für Studierende der Computerlinguistik. In: DeLFI 2007, 5. e-Learning Fachtagung Informatik, 17.-20. September 2007, Siegen, Germany, 301-302.).

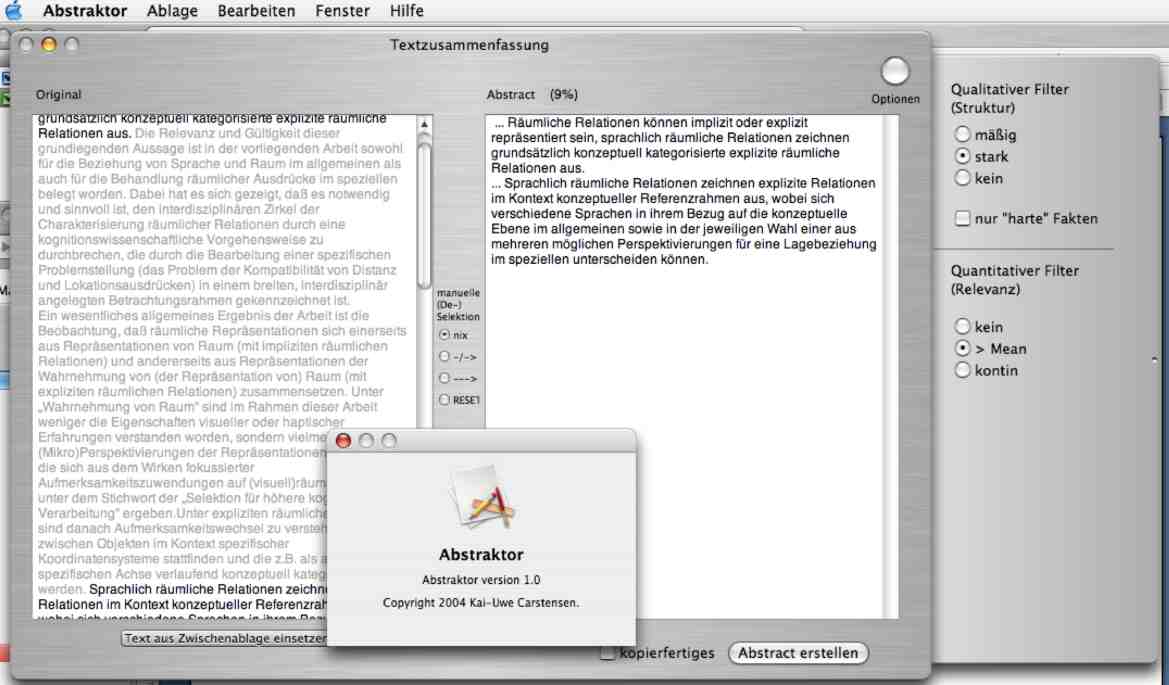

Computer-aided summarization of text (CAST)

This was a small prototype I developed in Python for Mac OS X (with PyObjC). It was intended to provide several adjustable filters, plus a one-click facility to add a clause to, or delete it from, the summary.

Dialektatlas Mittleres Westdeutschland (DMW)

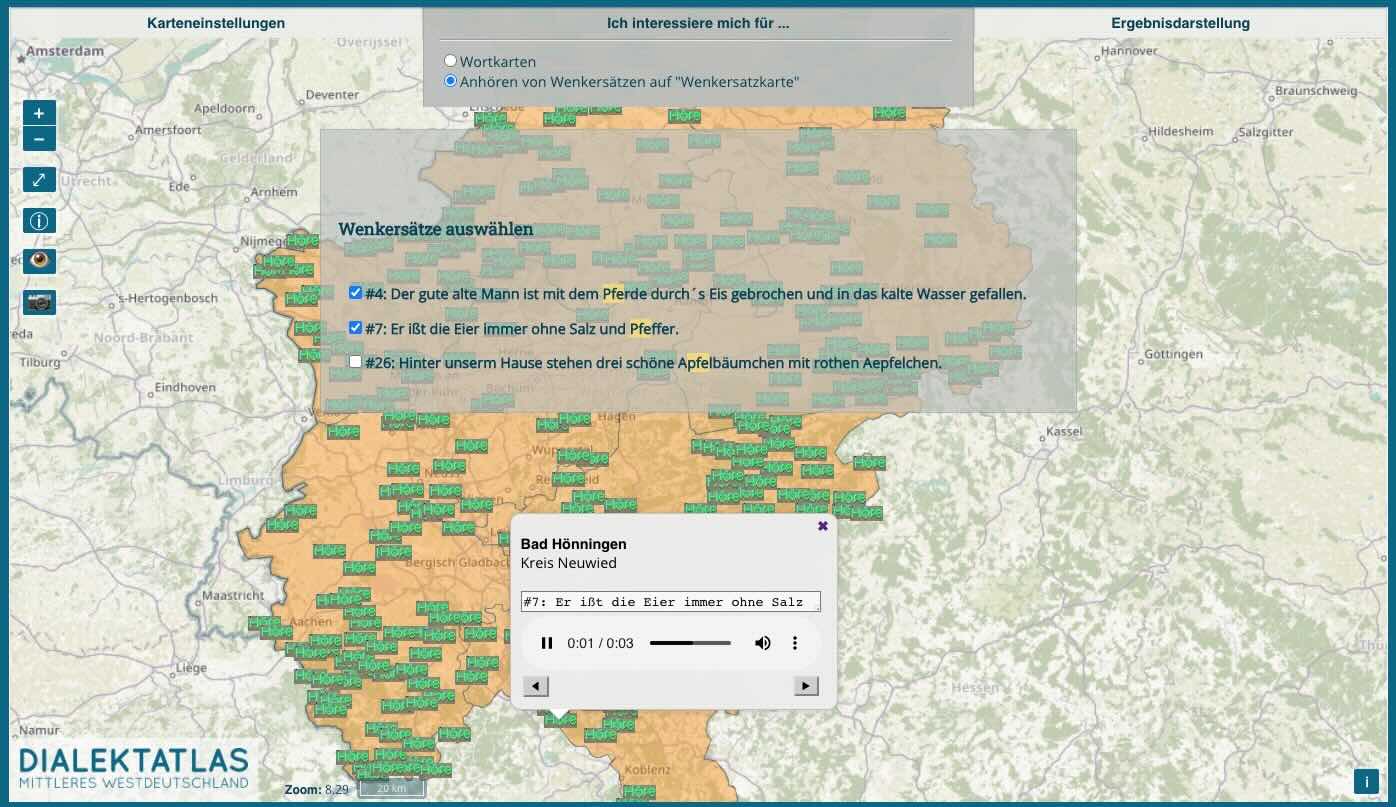

In this 17+-year-long project (2016-), financed by the Academy of Sciences of North Rhine-Westphalia, we collect spoken dialect data in and around North Rhine-Westphalia and analyze them with respect to a set of phenomena (roughly 3000 speakers at 800+ places; 600+ verbal tasks each; often more than one phenomenon per task), all stored in a database. The goal is to develop a digital dialect atlas, a web interface to the database that will dynamically generate different geo-referenced maps, with a click-to-hear option of the dialect utterance at some place, given some query (a so-called "Speaking atlas"). Since 2021, the atlas has offered preview word maps (see the comprehensive technical description in my paper on preview word map generation in the DMW project). This is because, as an academy project, it is primarily geared toward the public (i.e., lay users). As of 2025, the atlas also contains theoretically motivated preview maps ("preview phenomenon maps"). Classical dialect maps will be produced only later.

Most people (in my experience, especially old-generation dialectologists) don't immediately get the point that "preview map" is an innovative concept that differs in various respects from usual dialect data visualizations.

- Preview word maps automatically portray unevaluated data, while usual dialect atlas maps portray the results of manual, intellectual evaluation (sorting, categorizing the transcriptions and naming the categories to be presented). Because of that, preview maps do not show boundaries, marked regions, exceptions etc., and they do not contain annotations/comments.

- The preview maps can be generated dynamically/on-line as soon as there are data in the database. That is, most of the time they present a partial view (i.e., only a "preview") of the actual data distribution in the region, which is incompatible with the concept of a classical dialect map.

- Therefore, the automatic visualization has to cope with vast numbers of dialectal pronunciations of words (for our current preview word maps): how do you present such a mess to a (lay) user who wants to easily identify similarities and differences, rather than being faced with the pronunciation types themselves?

- Preview maps share the problem of continuous variation with dialectometry, but little more. Often, dialectometric maps are incompatible with user-friendly maps, and they may even be based on evaluated data.

- In our preview word maps, we use an innovative, specifically tailored mechanism for the task of automatic categorization (that determines the legend of the maps and the colouring). For different reasons, we deliberately DID NOT use the Levenshtein algorithm, which some people reckon to be the Holy Grail for this kind of clustering task (these people can be VERY religious about that (inquisition-style), despite the fact that using it would be the proverbial sledgehammer to crack a nut).
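For readers unfamiliar with it: the Levenshtein distance counts the minimum number of single-character insertions, deletions and substitutions needed to turn one string into another. The following is a textbook dynamic-programming sketch in Python, shown only to illustrate the measure that was not used; it is not part of the DMW system, and the example strings (including the variant "beren") are made up for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits that turn a into b."""
    # Distances between the empty prefix of a and every prefix of b.
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]  # distance between a[:i] and the empty prefix of b
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep on a match)
            ))
        previous = current
    return previous[-1]


print(levenshtein("beten", "beren"))     # one substitution
print(levenshtein("kitten", "sitting"))  # classic textbook pair
```

Note that this basic variant weights every character substitution equally.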

The DMW project is one of the eHumanities projects of the Academy of Sciences of North Rhine-Westphalia, see a screenshot of a corresponding page from 2020:

In a description of the project on an academy page it says (still as of 2025):

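Das dynamische Konzept wird ermöglichen, für jede Nutzeranfrage individuelle Kartenansichten zu erzeugen. Petra Vogel: „Wir wollen Pionierarbeit leisten und den ersten wirklich interaktiven, leicht zugänglichen Dialektatlas im Web bereitstellen.“

[ “The dynamic concept will make it possible to generate individual map views for every user query. Petra Vogel: ‘We want to do pioneering work and provide the first truly interactive, easily accessible dialect atlas on the web.’” ]

Even if you don't understand German, you will notice the "Online", "Software", "Open Access/Data", "dynamisch", "interaktiv", "Web" words, which characterize it as a digital project!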

The next two pictures give an impression of what this looks like; the third shows a different kind of map (where the so-called "Wenker sentences" can be selected and listened to at some place).

As a computational linguist, I designed the system and the tools, and tried to coordinate the information flow between the informatics department and the linguists at the participating universities (Bonn, Münster, Paderborn, Siegen) (homepage of the project). I always respected insights of attention theory and visual analytics in the development of such complex interactive maps. The technical aspects (and most of the programming) are handled by members of Siegen's informatics department, by the way.

Have a look at the abstract of an early talk about the visualization aspects of the project at VisuHu2017 ("Visualization processes in the humanities"), Zurich, or at the selected slides of my talk, where general aspects and first developments were presented (here are more recent slides (2021) of a talk presenting the innovative idea of user-friendly on-the-fly generation of preview dialect maps based on unevaluated data).

As to presentations of DMW preview maps, in general, Dyson's slogan applies metaphorically: Buy direct from the people who made it.

Reader, if you have come this far, I can tell you that the current system version includes an "expert mode" of the DMW map interface that I developed (not fully tested, though, hence problems may occur). There you can process our data with respect to several hundred of the 1200 phenomena overall, and generate phenomenon-related preview maps showing the distribution of (categorized) variants according to the specifications made in a dedicated interface for the analysis of phenomena in the DMW. This is done according to the scheme of visual analytics (introduced in the 2021 talk slides), have a look:

The following picture shows a phenomenon preview map of "rhotacism" of /t/ in beten.

There is a short intro to the DMW phenomena preview maps you might want to take a look at. I am no longer a member of the project, by the way.

Cognitivist Ontologies (CogOnt)

On that basis, an attention-based cognitivist semantics of linguistic expressions (gradation expressions, Carstensen 2013 Paper; locative prepositions, Carstensen 2015, in a Pdf of the whole book; directional prepositions and verbs, From motion perception to Bob Dylan paper (2019), slides of the talk on that topic ('Language and Perception' conference in Bern, Switzerland, September 2017)) can be specified.

Some have asked me why I use the term Cognitivist so prominently and often. The answer has two parts. First, there is a certain general connection: A while ago, I found a proposal from 1998 for a 'Meaning and cognition' research group on my computer (not written by me). It suggests a non-specific interpretation:

It will be convenient to have a name for the general approach outlined above; we will refer to it as the 'cognitivist' approach, because we believe that the essential trait that most recent semantic theories have in common is that they share a broadly cognitivist outlook. 'Cognitivist semantics', as we use the term, denotes neither a school, nor even a set of premises shared by a given range of theories. The term is merely to suggest a certain trend in semantic theory which we endorse, and which stands in marked contrast to the views underlying most philosophical approaches to semantics.

Yet it is already connected to me, as one branch of relevant theories was referred to as follows (at a time when the publication of my dissertation thesis was still forthcoming) (my emphasis):

Semantic theories developed within the framework of cognitive linguistics such as Jackendoff (1983), Herskovits (1986), Lakoff (1987), Habel (1988), Habel, Kanngießer, and Rickheit (1996), and Carstensen (forthcoming). The work of Bierwisch and his associates may be seen as part of these developments, too (Bierwisch 1983, Bierwisch and Lang 1987).

The second part of the answer is that since 2011, I have used this term non-generically to refer to my attentional approach to semantics and ontology, different aspects of which are addressed in my recent papers. Accordingly, Cognitivism not only refers to a cognitive position, but more specifically to an alternative theoretical stance on ontology (as opposed to, e.g., Conceptualism and Realism), based on recent insights gained in Cognitive Science.

Cognitivist ontologies and semantics are also relevant for the development of logics. Read my Frontiers in AI paper (2021) (open access link) on quantification.

By the way, the whole idea of Carstensen (2011) is based on psychological observations in the local/global perception paradigm. Personally, I reckon these observations to be as interesting and important as the discovery of mirror neurons. But hey, you know, ...

Courses/Teaching

- Lectures

- Foundations of Cognitive Science (some of my slides)

- Knowledge representation

- Natürlichsprachliche Systeme/Anwendungen der Sprachtechnologie (Natural Language Systems/Applications of Language Technology)

- Grundlagen der Computerlinguistik/Sprachverarbeitung (Introduction to Computational Linguistics/Natural Language Processing)

- I: Korpora und flache Sprachverarbeitung (Corpora and shallow processing)

- II: Tiefe Sprachverarbeitung (Deep processing)

- Formale Grundlagen (Foundations of logic)

- Einführung in die Germanistische Linguistik (Introduction to linguistics)

- Seminars

- Language and Space and the semantics of spatial expressions (location and gradation)

- Language/Text generation and the generation of route directions

- Knowledge representation, e.g. this one

- Text summarization

- Semantics

- Formal semantics (Heim/Kratzer-style)

- Computational semantics (Blackburn/Bos-style)

- Ontological semantics

- Cognitive semantics

- General introduction to semantics (both formal and cognitive, Löbner-style)

- Pragmatik (Pragmatics)

- Spracherwerb (Language acquisition)

- Morphologie (Morphology)

- Textlinguistik (Text linguistics)

- Practical courses

- Practical course to Lecture "Introduction to Artificial Intelligence"

- Introduction to PROLOG

- Elementary introduction to Python

Sprachtechnologie / language technology

Co-Editing the German Introduction to Computational Linguistics and Language Technology (Computerlinguistik und Sprachtechnologie - eine Einführung)

Click on a picture to get to the homepage of the respective edition.

I am also a reviewer for the German handbook of Artificial Intelligence ("Handbuch der Künstlichen Intelligenz") (for the natural language processing part), and was one of the many mentioned in the preface of the world's most prominent introduction to speech and language processing by Dan Jurafsky and James Martin (2nd ed.).

Language Technology - A survey (Sprachtechnologie - ein Überblick)

Based on my lectures on Natural Language Systems and Language Technology, I have written a small online book in German on the main applications/system types of computational linguistics. Written between 2007 and 2009, it does not reflect current techniques, but provides an overview of historical developments, and of the goals and problems of the field. However, there are some epilogues with remarks on recent developments.

Click on the fake book cover to download a pdf.

I would be happy to receive comments/feedback.

By the way (another fun fact), this intro was referenced in the overview of computational linguistics in the German handbook of cognitive science. Nice to be put amongst 1.5 dozen of the most famous people of our discipline(s), have a look:

Minor point: If you're interested, here is my very short assessment of DeepL's abilities (2022).

News and scientific stuff

- The articles on spatial semantics in WSK (see below) are now online! Check out the collection on De Gruyter Brill.

- (early 2025:) I have just published 500+ modifiable dynamic preview phenomenon maps (of dialectological phenomena collected by our dialectologists) in the map interface of the DMW project! They can be generated with the new interface for computer-assisted analysis of (mostly phonetic) dialect phenomena made by yours truly.

In each case, the specification of variants and variant types is just a proposal. Feel free to modify the phenomenon specs to create your own maps!

Read the short intro of how to access and use the new DMW map interface for experts. Visit the DMW project's homepage and have fun exploring West-German non-standard language!

See also:

- My paper on generating preview word maps in the DMW project (Pdf) (September 2022)

- Slides of a talk presenting the innovative idea of user-friendly on-the-fly generation of preview dialect maps based on unevaluated data.

(end of 2024) I've been invited to write 24 articles on "Raumsemantik" 'spatial semantics' in the WSK Semantik und Pragmatik 'Dictionary Language and Communication: Semantics and Pragmatics'. For me, this topic of introducing spatial semantics has somehow come full circle (see Vater 1996's introduction to "Raum-Linguistik" – the first and only introduction to spatial linguistics/semantics as a book in Germany – on the right; he was the one who published my dissertation thesis).

- (2024) [Some German here] The collection of articles on the Generic Masculine as part of the gender debate is published! Have a look at my contribution "Das generische Maskulinum – rational betrachtet" (Pdf) to the open access book.

Those who pay attention will notice that the generic masculine is only ostensibly my concern. My point is rather that some people use their position (of power) to sell their half-baked or even false opinions as reasonable views, and that others even approve of this, which on the whole increasingly impairs reasonable debate in a wide variety of areas.

Some reversals of modifications that had been made without permission did not make it into the print version, so the article Pdf here is the version authorized by me.

- My article Attentional semantics of deictic locatives has appeared in Lingua (Nov. 2023). See what this means in the "About me" section.

- Can you believe it? I finally published my paper co-authored by Bob Dylan, have a look:

Haha, just kidding. The paper and the title are real. Bob Dylan as co-author and the category, however, were automatically constructed by Semantic Scholar, from which this is copied. THAT would have been a coup if he had joined me in writing about directionals. You know, how many roads must a man walk down... :-)

- Read also

- my article Quantification: The view from generation in Frontiers in Artificial Intelligence – Language and Computation (2021) (Pdf).

- my rules for reviewing (caution: satire) and A Personal View on Reviewing. Have fun reading! Comments are welcome!

Other stuff

- In case you didn't know: I love contrasts. Sea (where I come from) vs. mountains (where I lived for a while) is one of them. The picture of the mountains (Eiger, Mönch, Jungfrau) was taken by me only a few kilometres away from the place where I lived (admittedly, it was very good weather that day).

- If you wondered why I am so interested in spatial semantics, here's why: It's all about the essential questions in life: where you are, where you're at, and why ;-)

By the way, I just published a paper on "Where is 'here'", i.e., on the meaning of such deictic terms (see the Lingua paper (2023) (Pdf of accepted manuscript)).

- Never thought that anything could top my sympathy for the "people watching" song/video ("Ankunftshalle") by Kettcar. But here is Sam Fender's "People watching", which just blew me away! These songs/videos are such a pleasant contrast to how those hoggish people out there are acting these days. Let's flood the zone with empathy!